The Singularity Is Near: When Humans Transcend Biology (32 page)

Authors: Ray Kurzweil

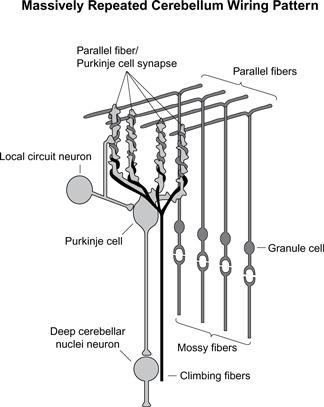

The gray and white, baseball-sized, bean-shaped brain region called the cerebellum sits on the brain stem and comprises more than half of the brain’s neurons. It provides a wide range of critical functions, including sensorimotor coordination, balance, control of movement tasks, and the ability to anticipate the results of actions (our own as well as those of other objects and persons).[77] Despite its diversity of functions and tasks, its synaptic and cell organization is extremely consistent, involving only several types of neurons. There appears to be a specific type of computation that it accomplishes.[78]

Despite the uniformity of the cerebellum’s information processing, the broad range of its functions can be understood in terms of the variety of inputs it receives from the cerebral cortex (via the brain-stem nuclei and then through the cerebellum’s mossy fiber cells) and from other regions (particularly the “inferior olive” region of the brain via the cerebellum’s climbing fiber cells). The cerebellum is responsible for our understanding of the timing and sequencing of sensory inputs as well as controlling our physical movements.

The cerebellum is also an example of how the brain’s considerable capacity greatly exceeds its compact genome. Most of the genome that is devoted to the brain describes the detailed structure of each type of neural cell (including its dendrites, spines, and synapses) and how these structures respond to stimulation and change. Relatively little genomic code is responsible for the actual “wiring.” In the cerebellum, the basic wiring method is repeated billions of times. It is clear that the genome does not provide specific information about each repetition of this cerebellar structure but rather specifies certain constraints as to how this structure is repeated (just as the genome does not specify the exact location of cells in other organs).

Some of the outputs of the cerebellum go to about two hundred thousand alpha motor neurons, which determine the final signals to the body’s approximately six hundred muscles. Inputs to the alpha motor neurons do not directly specify the movements of each of these muscles but are coded in a more compact, as yet poorly understood, fashion. The final signals to the muscles are determined at lower levels of the nervous system, specifically in the brain stem and spinal cord.[79] Interestingly, this organization is taken to an extreme in the octopus, the central nervous system of which apparently sends very high-level commands to each of its arms (such as “grasp that object and bring it closer”), leaving it up to an independent peripheral nervous system in each arm to carry out the mission.[80]

A great deal has been learned in recent years about the role of the cerebellum’s three principal nerve types. Neurons called “climbing fibers” appear to provide signals to train the cerebellum. Most of the output of the cerebellum comes from the large Purkinje cells (named for Johannes Purkinje, who identified the cell in 1837), each of which receives about two hundred thousand inputs (synapses), compared to the average of about one thousand for a typical neuron. The inputs come largely from the granule cells, which are the smallest neurons, packed about six million per square millimeter. Studies of the role of the cerebellum during the learning of handwriting movements by children show that the Purkinje cells actually sample the sequence of movements, with each one sensitive to a specific sample.[81]

Obviously, the cerebellum requires continual perceptual guidance from the visual cortex. The researchers were able to link the structure of cerebellum cells to the observation that there is an inverse relationship between curvature and speed when doing handwriting—that is, you can write faster by drawing straight lines instead of detailed curves for each letter.
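The inverse relationship between curvature and speed can be illustrated with a small sketch. It assumes a power-law form, speed = gain * curvature^(-1/3), which the motor-control literature often calls the two-thirds power law. The text above states only that the relationship is inverse, so the exponent, the `gain` value, and the function name `pen_speed` are illustrative assumptions rather than figures from the handwriting studies.

```python
def pen_speed(curvature: float, gain: float = 1.0, exponent: float = 1.0 / 3.0) -> float:
    """Tangential pen speed for a stroke with the given curvature (1 / radius).

    Assumed form (not taken from the source): speed = gain * curvature ** (-exponent).
    """
    if curvature <= 0:
        raise ValueError("curvature must be positive; this form is undefined for a straight line")
    return gain * curvature ** (-exponent)


if __name__ == "__main__":
    # Tighter curves (larger curvature) are drawn more slowly.
    for curvature in (0.1, 1.0, 10.0):
        print(f"curvature={curvature:5.1f}  speed={pen_speed(curvature):.3f}")
```

Under this assumed form, flattening a stroke (lowering its curvature) always raises the predicted speed, which matches the observation that straight lines can be written faster than detailed curves.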

Detailed cell studies and animal studies have provided us with impressive mathematical descriptions of the physiology and organization of the synapses of the cerebellum,[82] as well as of the coding of information in its inputs and outputs, and of the transformations performed.[83]

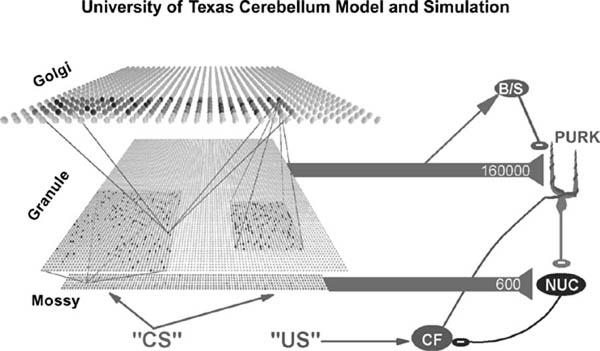

Gathering data from multiple studies, Javier F. Medina, Michael D. Mauk, and their colleagues at the University of Texas Medical School devised a detailed bottom-up simulation of the cerebellum. It features more than ten thousand simulated neurons and three hundred thousand synapses, and it includes all of the principal types of cerebellum cells.[84] The connections of the cells and synapses are determined by a computer, which “wires” the simulated cerebellar region by following constraints and rules, similar to the stochastic (random within restrictions) method used to wire the actual human brain from its genetic code.[85]

It would not be difficult to expand the University of Texas cerebellar simulation to a larger number of synapses and cells.
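The idea of wiring that is "random within restrictions" can be sketched in a few lines. This is not the Texas group's code: the single rule used here (every Purkinje cell receives a fixed number of distinct granule-cell inputs), the function name `wire_region`, and all of its parameters are hypothetical choices made for illustration.

```python
import random


def wire_region(n_granule: int, n_purkinje: int, fan_in: int, seed: int = 0) -> dict[int, list[int]]:
    """Randomly connect granule cells to Purkinje cells under a fixed rule.

    Rule (assumed for illustration): every Purkinje cell receives exactly
    `fan_in` distinct granule-cell inputs, chosen at random.
    """
    if fan_in > n_granule:
        raise ValueError("fan_in cannot exceed the number of granule cells")
    rng = random.Random(seed)  # seeded so that a given wiring is reproducible
    return {
        purkinje: sorted(rng.sample(range(n_granule), fan_in))
        for purkinje in range(n_purkinje)
    }


if __name__ == "__main__":
    wiring = wire_region(n_granule=1000, n_purkinje=3, fan_in=5)
    for purkinje, inputs in wiring.items():
        print(purkinje, inputs)
```

Only the rule and the cell counts are specified; the individual connections are not. Scaling the sketch up means changing the counts, not writing out more connections, which parallels the point that the simulation could be expanded to more cells and synapses.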

The Texas researchers applied a classical learning experiment to their simulation and compared the results to many similar experiments on actual human conditioning. In the human studies, the task involved associating an auditory tone with a puff of air applied to the eyelid, which causes the eyelid to close. If the puff of air and the tone are presented together for one hundred to two hundred trials, the subject will learn the association and close the eye upon merely hearing the tone. If the tone is then presented many times without the air puff, the subject ultimately learns to disassociate the two stimuli (to “extinguish” the response), so the learning is bidirectional. After tuning a variety of parameters, the simulation provided a reasonable match to experimental results on human and animal cerebellar conditioning. Interestingly, the researchers found that if they created simulated cerebellar lesions (by removing portions of the simulated cerebellar network), they got results similar to those obtained in experiments on rabbits that had received actual cerebellar lesions.[86]

On account of the uniformity of this large region of the brain and the relative simplicity of its interneuronal wiring, its input-output transformations are relatively well understood, compared to those of other brain regions. Although the relevant equations still require refinement, this bottom-up simulation has proved quite impressive.
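The bidirectional learning in the eyelid-conditioning experiment (acquisition followed by extinction) can be illustrated with a toy model. The sketch below uses a textbook error-driven update in the style of the Rescorla-Wagner rule on a single association strength; it is far simpler than the Texas simulation and is not derived from it. The learning `rate`, the count of 150 trials per phase (chosen from within the one hundred to two hundred trials mentioned above), and the function name `run_trials` are assumptions.

```python
def run_trials(strength: float, puff_present: bool, n_trials: int, rate: float = 0.05) -> list[float]:
    """Return the tone -> eyelid-closure association strength after each trial."""
    target = 1.0 if puff_present else 0.0
    history = []
    for _ in range(n_trials):
        strength += rate * (target - strength)  # error-driven (delta-rule) update
        history.append(strength)
    return history


# Phase 1: tone paired with the air puff, so the association is acquired.
acquisition = run_trials(0.0, puff_present=True, n_trials=150)
# Phase 2: tone alone, so the learned response is extinguished.
extinction = run_trials(acquisition[-1], puff_present=False, n_trials=150)

if __name__ == "__main__":
    print(f"after acquisition: {acquisition[-1]:.3f}")
    print(f"after extinction:  {extinction[-1]:.3f}")
```

The same update rule drives the strength up when the puff is present and back down when it is absent, which is the sense in which the learning is bidirectional.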

Another Example: Watts’s Model of the Auditory Regions

I believe that the way to create a brain-like intelligence is to build a real-time working model system, accurate in sufficient detail to express the essence of each computation that is being performed, and verify its correct operation against measurements of the real system. The model must run in real-time so that we will be forced to deal with inconvenient and complex real-world inputs that we might not otherwise think to present to it. The model must operate at sufficient resolution to be comparable to the real system, so that we build the right intuitions about what information is represented at each stage. Following Mead,[87] the model development necessarily begins at the boundaries of the system (i.e., the sensors) where the real system is well-understood, and then can advance into the less-understood regions. . . . In this way, the model can contribute fundamentally to our advancing understanding of the system, rather than simply mirroring the existing understanding. In the context of such great complexity, it is possible that the only practical way to understand the real system is to build a working model, from the sensors inward, building on our newly enabled ability to visualize the complexity of the system as we advance into it. Such an approach could be called reverse-engineering of the brain. . . . Note that I am not advocating a blind copying of structures whose purpose we don’t understand, like the legendary Icarus who naively attempted to build wings out of feathers and wax. Rather, I am advocating that we respect the complexity and richness that is already well-understood at low levels, before proceeding to higher levels.

– LLOYD WATTS[88]

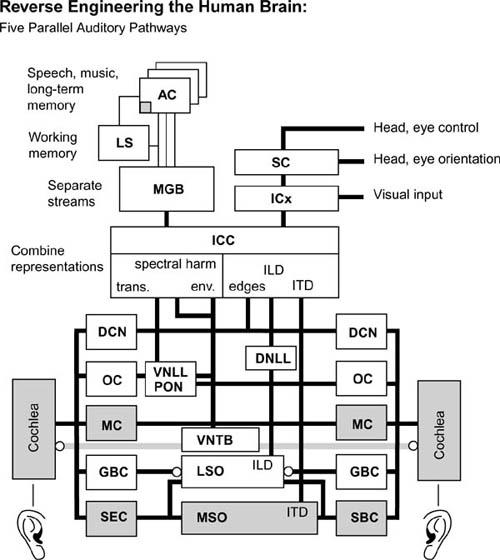

A major example of neuromorphic modeling of a region of the brain is the comprehensive replica of a significant portion of the human auditory-processing system developed by Lloyd Watts and his colleagues.[89] It is based on neurobiological studies of specific neuron types as well as on information regarding interneuronal connection. The model, which has many of the same properties as human hearing and can locate and identify sounds, has five parallel paths of processing auditory information and includes the actual intermediate representations of this information at each stage of neural processing. Watts has implemented his model as real-time computer software which, though a work in progress, illustrates the feasibility of converting neurobiological models and brain connection data into working simulations. Unlike the cerebellum model described above, the software does not reproduce each individual neuron and connection; instead it reproduces the transformations performed by each region.

Watts’s software is capable of matching the intricacies that have been revealed in subtle experiments on human hearing and auditory discrimination. Watts has used his model as a preprocessor (front end) in speech recognition systems and has demonstrated its ability to pick out one speaker from background sounds (the “cocktail party effect”). This is an impressive feat of which humans are capable but which, until now, had not been feasible in automated speech-recognition systems.[90]

Like human hearing, Watts’s cochlea model is endowed with spectral sensitivity (we hear better at certain frequencies), temporal responses (we are sensitive to the timing of sounds, which creates the sensation of their spatial locations), masking, nonlinear frequency-dependent amplitude compression (which allows for greater dynamic range, that is, the ability to hear both loud and quiet sounds), gain control (amplification), and other subtle features. The results it obtains are directly verifiable by biological and psychophysical data.
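To see why nonlinear amplitude compression extends dynamic range, consider a minimal sketch of power-law compression. It ignores the frequency dependence mentioned above and is not Watts's cochlea model; the exponent of 0.3 and the function names `compress` and `level_db` are assumptions chosen for illustration.

```python
import math


def compress(amplitude: float, exponent: float = 0.3) -> float:
    """Power-law amplitude compression that preserves the sign of the input."""
    return math.copysign(abs(amplitude) ** exponent, amplitude)


def level_db(amplitude: float) -> float:
    """Level in decibels relative to an amplitude of 1.0."""
    return 20.0 * math.log10(abs(amplitude))


if __name__ == "__main__":
    quiet, loud = 1e-4, 1.0
    range_in = level_db(loud) - level_db(quiet)
    range_out = level_db(compress(loud)) - level_db(compress(quiet))
    print(f"input range:  {range_in:.1f} dB")
    print(f"output range: {range_out:.1f} dB")
```

With an exponent below 1, a wide span of input levels is squeezed into a narrower span of output levels, so both quiet and loud sounds can be represented by a stage with limited range.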

The next segment of the model is the cochlear nucleus, which Yale University professor of neuroscience and neurobiology Gordon M. Shepherd[91] has described as “one of the best understood regions of the brain.”[92] Watts’s simulation of the cochlear nucleus is based on work by E. Young that describes in detail “the essential cell types responsible for detecting spectral energy, broadband transients, fine tuning in spectral channels, enhancing sensitivity to temporary envelope in spectral channels, and spectral edges and notches, all while adjusting gain for optimum sensitivity within the limited dynamic range of the spiking neural code.”[93]

The Watts model captures many other details, such as the interaural time difference (ITD) computed by the medial superior olive cells.[94] It also represents the interaural level difference (ILD) computed by the lateral superior olive cells and normalizations and adjustments made by the inferior colliculus cells.[95]
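The two interaural cues can be made concrete with a small signal-processing sketch. It estimates the ITD as the lag that maximizes the cross-correlation between the two ear signals, and the ILD as the ratio of their RMS levels in decibels. These are standard textbook estimators; the text does not say how the Watts model or the superior olive cells compute these quantities, so the method, the function names, and the sign conventions are assumptions.

```python
import math


def estimate_itd(left: list[float], right: list[float], sample_rate: float, max_lag: int) -> float:
    """Estimate the interaural time difference in seconds by cross-correlation.

    A positive result means the sound reached the left ear first
    (the right-ear signal is a delayed copy of the left).
    """
    def correlation(lag: int) -> float:
        # Sum of left[i] * right[i + lag] over the overlapping samples.
        return sum(
            left[i] * right[i + lag]
            for i in range(len(left))
            if 0 <= i + lag < len(right)
        )

    best_lag = max(range(-max_lag, max_lag + 1), key=correlation)
    return best_lag / sample_rate


def estimate_ild(left: list[float], right: list[float]) -> float:
    """Interaural level difference in dB (positive when the left ear is louder)."""
    def rms(signal: list[float]) -> float:
        return math.sqrt(sum(x * x for x in signal) / len(signal))

    return 20.0 * math.log10(rms(left) / rms(right))


if __name__ == "__main__":
    # A short click at the left ear; the right ear hears it 3 samples later at half amplitude.
    left = [0.0] * 20
    left[5:9] = [1.0, -2.0, 3.0, -1.0]
    right = [0.0] * 3 + [0.5 * x for x in left[:-3]]
    print(estimate_itd(left, right, sample_rate=8000.0, max_lag=6))
    print(estimate_ild(left, right))
```

In the usage example the delay and the amplitude ratio are built into the test signal, so the estimates can be checked against known values: a delay of three samples and a level ratio of two.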