Whole

Authors: T. Colin Campbell

Second, it probably sounds pretty convincing, even if you don’t really understand it. Research like this seems airtight because it deals with objective facts—reactions, genetic mutations, and carcinogenesis—as opposed to messy things like human behavior and lifestyle. Only by excluding messy and complex reality can we make linear, causal statements about biological chain reactions.

Although we worked diligently on this series of studies for many years, obtained very impressive results, and published lots of professional papers, we were still left with a major unanswered question: did this finding—that higher dietary casein intake produced more cancer in rats—tell us anything about other proteins, chemical carcinogens, cancers, diseases, and species (e.g., humans)?

In other words, did this startling outlier result about dietary protein suggest that our love affair with animal protein was misguided and dangerous? Did cow’s milk in modest quantities promote cancer in humans? What about other diseases? Did other animal proteins have the same effect? While I tried for decades to answer these questions using reductionist tools, it gradually dawned on me that these questions often strayed beyond what reductionist science could answer. Not because you couldn’t set up experiments to compare the effects of a diet high in animal protein with other factors typically found in a WFPB diet. Those have been done, and the results are jaw-dropping (particularly the research and clinical experiences of Esselstyn, McDougall, Goldhamer, Barnard, and Ornish, some of which we touch on elsewhere in this book).

No, the problem with reductionist research is that it’s too easy to run experiments that show what appears to be just the opposite effect: that milk prevents cancer. That fish oil protects the brain. That lots of animal protein and fat stabilizes blood sugar and prevents obesity and diabetes. Because when you’re looking through a microscope, either literally or metaphorically, you can’t see the big picture. All you can see is a tiny bit of the far larger truth, completely out of context. And whoever has the loudest megaphone—in this case, the ones shouting that milk and meat are necessary for optimal human health, whose megaphones are thoughtfully provided by the meat and dairy industries—has the most influence.

I’m sure that given enough time and money, I could conduct reductionist-style experiments that show health benefits for Coke, deep-fried Snickers bars (these are very popular at the North Carolina State Fair), and even AF (we actually showed such effects once in our lab³). I’d have to manipulate the sample (say, studying the effects of Coke on people dying of thirst in the Sahara, or the effects of a Snickers bar on the mortality rate of tired drivers at 2 a.m.). I could also measure hundreds of different biomarkers and report only on the outcomes that support my bias. Or, like the elephant examiners we met in chapter four, I could perform honest research and still end up with conclusions that are incomplete and misleading because of the limited scope of my vision.

This is why we so frequently see conflicting research results in the media: the predominant research framework actually *encourages* such conflicts. This same reductionist framework is also why our society’s beliefs about nutrition often seem so contradictory and confusing, whether we get them from textbooks, food packaging, or government messaging.

Though reductionism originates in the lab, it pervades the public imagination as much as it does the thinking of academics. Because we scientists and researchers are considered “experts,” our worldview permeates our culture’s understanding of nutrition at every level.

Pick up an elementary or high school nutrition textbook and you will inevitably find a list of known nutrients. There are about a dozen vitamins and minerals, perhaps as many as twenty to twenty-two amino acids, and three macronutrients (fat, carbohydrate, and protein). These chemicals and their effects are treated as the essence of nutrition: just get enough (but not too much) of each kind and you’re fine. It’s been that way for a long time. We’re brought up thinking of food in terms of the individual elements that we need. We eat carrots for vitamin A and oranges for vitamin C, and drink milk for calcium and vitamin D.

If we like the particular food, we’re happy to get our nutrients from it. But if we don’t like that food—spinach, or Brussels sprouts, or sweet potatoes—we think it’s fine to skip it as long as we take a supplement with the same amounts of these nutrients. But even recent reductionist research has shown that supplementation doesn’t work. As it turns out, an apple does a lot more inside our bodies than all the known apple nutrients ingested in pill form. The whole apple is far more than the sum of its parts. Thanks to the reductionist worldview, however, we don’t really believe the food itself is important. Only the nutrients contained in the food matter.

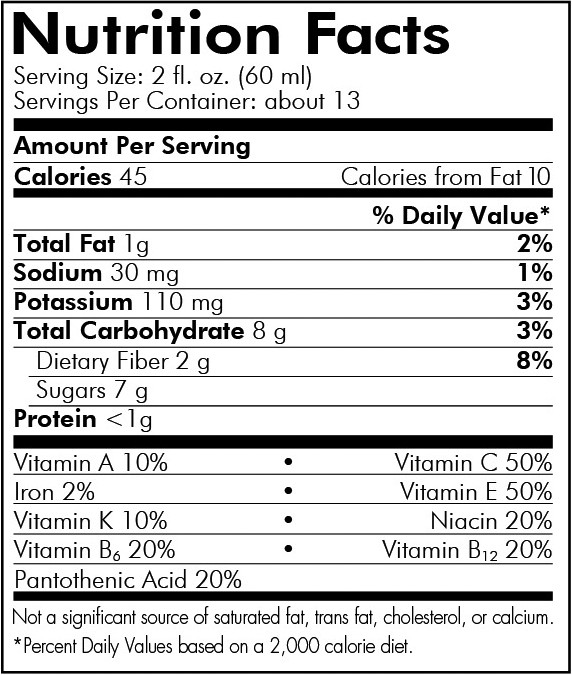

This belief is reinforced every time we read the labels on food packages. These labels can be quite extensive: the typical food label lists many individual nutrients, with a precise amount per serving shown for each (see Figure 5-2).

I was a member of the 1990 National Academy of Sciences (NAS) expert panel assigned by the FDA to standardize and simplify the food-labeling program. Two schools of thought existed on our panel. One view favored using the label to tell customers how much of each of the many nutrients is inside. The other, to which I subscribed, aimed to minimize quantitative information on the label. I believed that we would serve the public best by providing some general information, like a list of ingredients, while staying away from the finer details. (My school of thought lost, although our report did end up proposing a labeling model that was more focused than the original.)

FIGURE 5-2. A typical example of a food label⁴

Ingredients are important, and not just for avoiding ones to which you might be allergic. You probably don’t want to eat foods with long lists of unpronounceable words, and I assume you’d like to know if your breakfast cereal contains large quantities of high-fructose corn syrup. But including fine-print details like the number of micrograms of niacin performs two disservices to the public that can lead to poor eating choices. First, it overwhelms consumers and causes most of them to ignore the labels entirely. Second, it implies that the nutrients included on the label (a minuscule percentage of the total known nutrients) are the only important ones—indeed, perhaps the only ones that exist.

This isn’t the only way the government supports and furthers reductionist nutritional philosophy. A very public example is the effort expended for many years to develop a nutrient composition database that includes all known foods. Since the early 1960s, the U.S. Department of Agriculture has been working on an enormous database in which each food is accompanied by an extensive list of the nutrients it contains and their amounts. This database is now available on the Internet for the public’s use, at http://ndb.nal.usda.gov.

Government scientists have also promoted reductionist nutritional policy through their nutrient recommendations, which focus on the quantities of each nutrient deemed important for good health—and these nutrient recommendations have a much further reach than an online database. Every five years, the NAS’s Food and Nutrition Board reviews the latest science to update these recommendations. Generally known as recommended dietary allowances (RDAs), they were revised in a 2002 report to provide not single-number RDAs, but ranges of intake to maximize health and minimize disease (now called recommended daily intakes, RDIs). Trouble is, RDIs still focus on individual nutrients. And these recommendations, expressed as numbers, now serve as quality control criteria for public nutrition initiatives like school lunch programs, hospital food guidelines, and other government-subsidized food service programs.

Armed with both these government recommendations and that vast nutritional database, consumers can now look up their RDIs and then cross-check them against the database to determine what foods to add or subtract in order to achieve proper nutrient intake. The RDI creators must wonder how our ancestors, without access to computers, were able to eat well enough to survive and reproduce!

Of course, nobody chooses their diet based on databases and RDIs. But quantifying foods this way reinforces the impression that this is the best way to understand nutrition, and the fear engendered by those reductionist tools leads many people to worry about not getting their daily nutrient allowances. Hence Americans spend $25–$30 billion or so each year (as of 2007) on nutrient supplements.⁵

Many consider the use of these products to be the essence of modern nutrition. Similarly, foods have long been fortified with specific nutrients like iron, selenium, calcium, vitamin D, and iodine, because certain areas of the world or groups of people suffer from deficiencies of them. In the case of serious nutritional deficiencies, like nineteenth-century British sailors suffering from scurvy due to the lack of vitamin C, or impoverished Third World villagers dying from protein deficiency, attention to individual nutrients makes some sense. In the case of malnutrition, a supplement can save lives in the short run by buying time to set up longer-term systems that provide sufficient and balanced nutrition from real food. But for most Americans who suffer from too much food and too much granular information about that food, this approach is misguided. It overwhelms us and keeps us, in motivational speaker Jim Rohn’s memorable phrase, “majoring in minor things.”

In short, virtually all of us, professionals and laypeople alike, talk about nutrition, study nutrition, sell nutrition, and practice nutrition in reference to specific nutrients and, oftentimes, to specific quantities. We fixate on the *amounts*.

Vitamins. Minerals. Fatty acids. And of course, the biggest obsession of them all: calories.

We’ve seen where this obsession comes from, and it’s easy enough to understand. After all, most people want to be healthy and feel good, and we’re taught that our health partially depends on getting precisely the right amount of these things into our bodies. So whether it’s the obsessive calorie counting of Weight Watchers or the 40/30/30 absurdity of the Zone diet, we believe that the more accurately we track our inputs, the more control we have over the output: our health.

Unfortunately, that just isn’t true. Nutrition is not a mathematical equation in which two plus two is four. The food we put in our mouths doesn’t control our nutrition—not entirely. What our bodies do with that food does.

Are you sitting down? Because I need to explain something that almost no one acknowledges about nutrition: there is almost no direct relationship between the amount of a nutrient consumed at a meal and the amount that actually reaches its main site of action in the body—what is called its *bioavailability*.

If, for example, I consume 100 milligrams of vitamin C at one meal, and 500 milligrams at a second meal, this does not mean that the second meal leads to five times as much vitamin C reaching the tissue where it works.

Does this sound like bad news? To reductionists, it certainly does. It means that we can never know exactly how much of a nutrient to ingest, because we can’t predict how much of it will be utilized. Uncertainty: a reductionist’s worst nightmare!

Actually, this is very good news. The reason we can’t predict how much of a nutrient will be absorbed and utilized by the body is that, within limits, it depends on what the body needs at that moment. Isn’t that amazing? In more scientific language, the proportion of a nutrient that is digested, absorbed, and provided to various tissues and the cells in those tissues is mostly dependent on the body’s need for that nutrient at that moment in time. This need is constantly “sensed” by the body and controlled by a variety of mechanisms that operate at various stages of the “pathway,” from nutrient ingestion to nutrient utilization. The body reigns supreme in choosing which nutrients it uses and which it discards unmetabolized. The pathway taken by a nutrient often branches, and branches further, and branches further again, leading the nutrient through a maze of reactions that is far more complex and unpredictable than the simple linear model of reductionism would suggest.

The proportion of ingested beta-carotene that is actually converted into its most common metabolite, retinol (vitamin A), can vary by as much as eightfold. The proportion converted also decreases with increasing doses of beta-carotene, thus keeping the absolute amount absorbed about the same. The percentage of calcium absorbed can vary by at least twofold; the higher the calcium intake, the lower the proportion absorbed into the blood, ensuring adequate calcium for the body and no more. Iron bioavailability can vary anywhere from threefold to as much as nineteenfold. The same holds true for virtually every nutrient and related chemical.