The Numbers Behind NUMB3RS (21 page)

Read The Numbers Behind NUMB3RS Online

Authors: Keith Devlin

The average distance from Kevin Bacon for all actors in the study was 2.94. Accordingly, a conservative estimate of the distance between any two actors (obtained by adding their distances from Kevin Bacon) yields about 2 times 2.94âapproximately 6! Of course, this is conservative (Kevin Bacon may not be on the shortest path between two actors), but it also falls short of satisfying “six degrees of separation” for the actors-in-the-same-movie graph, since some actors already have a distance from Kevin Bacon that is greater than 6. (Of course, actors know many other actors they haven't appeared in a movie with.)

Mathematicians have a different heroâthe same Paul Erdös we met earlier. Erdös was one of the most prolific mathematicians of the twentieth century, writing more than 1,500 papers with more than 500 coauthors. In 2000, using data from sixty years of mathematical papers in research journals, Jerrold Grossman constructed a “mathematical collaboration graph” with 337,454 nodes (authors) and 496,489 edges connecting authors who wrote at least one paper together. The average degree is 3.92 and indeed there is one “giant component” containing 208,200 vertices, with the remaining 45,139 vertices contained in 16,883 components. The “Erdös number” of a mathematician is the shortest distance from that mathematician to Paul Erdös. By convention it is 0 for Erdös himself, 1 for the 500-plus mathematicians who wrote papers with him, 2 for those who wrote at least one paper with an Erdös coauthor, and so on. (Both authors of this book have an Erdös number of 2; Devlin actually wrote a paper with Erdös once, but it was never published, so it doesn't count.) At the time of Grossman's study, the average Erdös number for all published mathematicians was 4.7. The largest known Erdös number is 15.

AN EXAMPLE OF SUCCESSFULLY CONNECTING THE DOTS

One of the goals of social network analysis is to estimate which edges are missing in a graph constructed from incomplete information. For example, the “triad problem” concerns the phenomenon of “triangularity.” If A, B, and C are three nodes of a network, and it is known that a certain relationship exists between A and B and also between A and C, then there is some likelihood that the same relationshipâperhaps “knows” or “communicates with” or “works with”âexists between B and C also. Such likelihoods are best expressed as probabilities, and mathematicians try to determine how to estimate those probabilities based on all of the information available. For particular kinds of networks and relationships, detailed information about the connection between A and B and the connection between A and C can be used to make intelligent guesses about the probability of a relationship between B and C. Those guesses can be combined with other sources of information about a network in a way that enhances the ability of an analyst to identify the key nodes that deserve the greatest attention in further surveillance.

On June 7, 2006, during a meeting in an isolated safehouse near Baqubah, Iraq, Abu Musab al-Zarqawi, the leader of Al Qaeda in Iraq and the most-wanted terrorist in that war zone, was killed by bombs dropped by American F-16 fighter jets. Locating and killing al-Zarqawi, who had led a vicious terrorist campaign that included the capture and televised beheadings of American civilians working in Iraq, had been for several years an extremely high-priority goal of the governments of the United States, Iraq, and Jordan. Accordingly, considerable effort and manpower were devoted to tracking him down.

Although details of the methods used are closely guarded secrets, it is known that the movements and communications of a large network of al-Zarqawi's associates were monitored as closely as possible over a long period of time. One of those associates, Sheik Abdul Rahman, described as al-Zarqawi's “spiritual advisor,” was pinpointed and ultimately provided the critical link. As U.S. military spokesman Major General William Caldwell said,

Through a painstaking intelligence effort, we were able to start tracking him [Abdul Rahman], monitor his movements, and establish when he was doing his linkup with al-Zarqawiâ¦. It truly was a very long, painstaking, deliberate exploitation of intelligence, information gathering, human sources, electronics, and signal intelligence that was done over a period of timeâmany, many weeks.

One can only imagine what the network graphs constructed by U.S. intelligence analysts looked like, but evidently the key step was identifying and zeroing in on a node at distance 1 from the most important target.

11

The Prisoner's Dilemma, Risk Analysis, and Counterterrorism

In the first season of

NUMB3RS

, an episode called “Dirty Bomb,” broadcast on April 22, 2005, highlighted a very real, and scary, terrorism scenario: the threatened detonation of a “dirty bomb,” where radioactive material is packed around conventional explosive, with the intention that the detonation will spread deadly radioactive material over a wide area. In the episode, a team of domestic terrorists hijacks a truck carrying canisters of cesium 137, a radioactive isotope. A breakthrough in the FBI's investigation leads to a raid on the criminals' hideout, and three members of the team are taken into custody. Unfortunately, the truck, the radioactive material, and at least one coconspirator remain at large, and the men in custody brazenly threaten that if they are not released, the bomb they claim to have assembled will be set off in Los Angeles.

Don and his FBI colleagues use conventional interrogation methods, separating the three suspects and trying to get each of them to reveal the location of the truck in return for a plea bargain deal. But the three have another idea: Release them first, and

then

they'll reveal where to find the truck. Don seeks Charlie's help to resolve the stalemate.

Charlie sees a way to use a classic mathematics problem, the “prisoner's dilemma,” from the branch of mathematics called game theory. Charlie explains the problem in its standard form, involving just two prisoners:

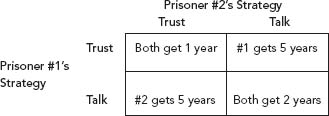

Say two people were to commit a crime. If neither talks, they each get a year. If one talks, he gets no time, the other does five years. If both talk, they both get two years.

A possible rationale for the scenario is this: If only one of the prisoners talks, he will go free as a reward for his promise to testify at the trial of the other prisoner, who will receive the full five-year sentence upon conviction. If neither talks, successful prosecution will be more difficult, and the defense lawyers will plea-bargain for one-year sentences. If both prisoners talk, they will both get a sentence of two years, rather than five, for their cooperation, which avoids a trial.

*

This scenario poses a major dilemma. The worst overall outcome for both prisoners is to talk; if they do, they both get two years. So it would seem sensible for each to stay quiet, and serve a year. But if you were one of the prisoners, having reasoned that it is better to stay quiet and serve one year, why not change your mind at the last moment and rat on your partner, thereby getting off scot-free? Seems like a smart move, right? In fact, it would be dumb not to do that. The trouble is, your partner will surely reason likewise, and the result is you both end up spending two years in prison. The more you try to puzzle it out, the more you find yourself going around in circles. In the end, you have to give up, resigned to having no alternative than to pursue the very action that you both know leads to a worse outcome.

If you are still unconvinced that your dilemma is truly hopeless, read on. Like Charlie, we'll look at the problem mathematically and derive a concrete answer.

HOW MATHEMATICIANS DEFINE A GAME

The theory of games became a mathematical discipline with the publication in 1944 of the book

The Theory of Games and Economic Behavior

by John von Neumann and Oskar Morgenstern. Their way of defining the game Charlie is describing is in terms of a payoff matrix, like this:

Note that in each case where one prisoner talks and the other doesn't, the one who talks goes free while his trusting partner gets five years.

Now let's see if we can figure out what is the best strategy for prisoner #1. (The analysis for #2 is exactly the same.)

A strategy is called “dominated” if it yields worse results than another strategy no matter what the other player does. If one strategy is dominated, then the other strategy would have to be a better choiceâright? Let's see.

If you are prisoner #1, you always do better by talking than trusting. Assuming your partner talks, you get two years rather than five years; assuming your partner trusts you, you go free rather than get one year. So “trust” is a dominated strategy, and “talk” is a better choice for youâno matter what the other does! (Game theory assumes that players are both rational and selfish, and that the payoff matrix is the whole story. So unless the payoffs incorporate “cost of selling out my fellow prisoner” in some wayâwhich they couldâthe reasoning just given is airtight.)

But wait, there's more. Notice that if both prisoners use the best strategy, the result is that both serve two years, whereas if they both used the inferior strategy, “trust,” the result is actually betterâboth serve only one year. Aha! So what is best for the players individually is

not

best for them collectively. The phenomenon that game theorists call cooperation is at work here.

If

the prisoners cooperate with each other, and trust each other not to talk,

then

they will get the best possible outcome.

This seeming paradoxâthe conflict between rational self-interest and what can be achieved through cooperationâhad a powerful influence on the development of game theory in the second half of the twentieth century. The prisoner's dilemma itself was first proposed by two mathematicians, Merrill Flood and Melvin Dresher, at the RAND Corporation, a government think tank that pioneered the application of mathematical methods to U.S. government strategy. Game theory was an important tool for military strategists during the cold war, and as we shall see, it is still important in mathematical analyses of strategies in the war against terrorism.

The mathematician John Nash, whose mathematical brilliance and struggle with mental illness were both dramatized in the award-winning film

A Beautiful Mind,

won a Nobel Prize in Economics for the breakthrough in game theory he achieved while earning his Ph.D. in mathematics at Princeton University. His theory, concerning what are now called Nash equilibria, is about “unregrettable” strategiesâthat is, combinations of strategy choices by individual players that no player can ever regret and say, “I could have done better if I'd used strategy X instead.” For any game with two or more players, each having a finite list of possible strategies, Nash proved that there will be at least one such equilibriumâat least one combination of strategies for the players that is stable in the sense that no player can obtain a higher payoff by changing strategy if no one else changes.

Nash's idea was that in a game in which all players are rational and selfish, trying only to maximize the payoff to themselves, the only possible stable outcomes are these equilibria, since all other combinations of strategy choices by the players will offer at least one player a potentially greater payoff by changing strategy. Often these equilibria involve what game theorists call “mixed strategies,” in which each player is allowed to use more than one of the strategies in their list (the so-called pure strategies), provided they assign a probability to each one and select a pure strategy at random according to those probabilities. In the battle of wits (“game of strategy”) between a pitcher and a batter in baseball, for example, the pitcher might choose among the pure strategies of fastball, curveball, and change-up, with probabilities of 60 percent, 33 percent, and 7 percent in order to keep the batter guessing.

For the payoff matrix shown above for the prisoner's dilemma, there is only one combination of strategies that yields a Nash equilibrium, and that is a combination of two pure strategiesâboth prisoners choose “talk.” If either prisoner departs from that strategy without the other changing strategy, then that departure causes an increase in their sentence, from two years to five. But if

both

change strategies, then they both improve their payoff, reducing their sentences from two years to one.

PLAY IT AGAIN, SAM

Prisoner's dilemma and other similar paradoxes helped spur the development of more general mathematical formulations, such as the notion of two players repeatedly playing the same game, which offers the possibility that the players will learn to trust each other by improving their payoffs. This leads to interesting possibilities, and in a famous experiment conducted around 1980, Robert Axelrod, a mathematical political scientist at the University of Michigan, organized a tournament by inviting colleagues around the world to write computer programs that would play sequences of prisoner's dilemma games against each other without any communication of intentions or “deal-making.” Each entrant's program could rely only on how its opponent's program was playing the game.

The winner of the prisoner's dilemma tournament was determined by simply keeping score: What was the average payoff won by each program against all other programs? The surprising winner was a program called “Tit for Tat,” written by Anatol Rapoport. The simplest of all of the programs entered, it behaved according to the following rule: Choose “trust” in the first game, and in later games choose whatever strategy the other player chose in the game before. This program is neither too niceâit will immediately punish the other player for choosing “talk”ânor too aggressive, since it will cooperate as long as the other player is cooperating. Even without the luxury of communication between the players, the Tit for Tat strategy seems to attract other computerized “players” to play the same way that it plays, leading to the best possible outcome for both.

In the fictitious scenario depicted in

NUMB3RS

' “Dirty Bomb” episode, clearly there

was

prior communication among the three criminals, and evidently they agreed to tough it out if apprehended, believing that this attitude would force the FBI to release them in order to prevent a radiation catastrophe. Similar departures from the usual assumptions of game theory are used in ongoing efforts by mathematicians to analyze and predict the strategies of terrorists and to determine the best strategies to defend against them. One of the ways to apply other mathematical ideas to enhance game theory is actually the same method that Charlie used to break apart the team of criminals, which we look at next.

RISK ASSESSMENT

The idea behind risk assessment (sometimes called “risk analysis” or “risk management”) is that an individual or group confronted with possible losses can assign numerical values to those lossesâperhaps actual dollar costsâand, by considering for each loss both its cost and its probability of occurring, determine the expected loss or risk it represents. They can then consider courses of action that reduce the risks, though the actions might incur some costs, too. The overall goal is to find the best combination of actions to minimize the overall costâthe cost of the actions plus the risks remaining after the actions are taken.

Among the earliest applications of risk assessment were the calculations made by insurance companies to determine how much money they should expect to pay in claims each year and the probability that the total claims will exceed financial reserves. Likewise, many companies and government agencies perform mathematical assessments of risks of various kinds, including natural disasters such as catastrophic accidents, fires, floods, and earthquakes, and take actions such as buying insurance and installing safety equipment to reduce those risks in a cost-effective manner.

Risk assessments can be made in the criminal justice system, too, and they are routinely made by defendants, their lawyers, and prosecutors, albeit usually without the benefit of actual mathematics. What Charlie realizes when confronted with the FBI's version of the prisoner's dilemmaâhow to crack the solidarity of the “nobody talks” strategy of the criminals in custodyâis that their shared strategy subjects the three of them to very unequal risks. When Don laments that none of them show any willingness to talk, Charlie responds, “Maybe that's because none of them realizes how much the others have to lose.”

Charlie convinces Don to try a different approach: Bring the three men into one room and give them a mathematical assessment of their individual risks (in the game-theoretic sense) in going to prison. Since each of them hasâin one way or anotherâa non-negligible probability of going to prison for their participation in the dirty bomb plot, Charlie wants to show them how different the consequences would be for them individually.

Although Charlie is intimidated by facing these menâa group not at all like his usual audience of eager CalSci studentsâhe bravely goes ahead, mumbling, “What I'm going to do today, mathematically, is construct a risk assessment for each of you. Basically quantify, if I can, the various choices you face and their respective consequences.”